Over the past week, we’ve explored the double-edged sword of AI—from the foundational risks that can cost millions to the complex regulations that govern its use. Now, we move from theory to practice. Understanding the risks and knowing the rules are the first two steps; building a resilient, enterprise-wide program is the critical third.

An AI compliance failure is rarely a technical problem; it’s a governance failure. The root cause is typically not a “bad algorithm” but a lack of strategic foresight—inadequate risk assessment, biased data, or a failure of human oversight.

This final guide provides an actionable blueprint for implementation. These are the concrete steps your organization can take to harness the power of AI safely, ethically, and profitably, transforming compliance from a bureaucratic hurdle into a strategic enabler of sustainable growth.

The Expanding Privacy Landscape: GDPR & CCPA

Beyond HIPAA’s healthcare rules and SOX’s financial-reporting requirements, broader data privacy laws grant individuals powerful rights that directly impact how AI systems can be deployed.

Navigating Data Subject Rights with GDPR

The EU’s General Data Protection Regulation (GDPR) is widely regarded as the most influential data privacy law in the world. Its extraterritorial reach covers organizations outside the EU that offer goods or services to, or monitor the behavior of, individuals in the EU, and its rules directly regulate automated systems. Article 22 establishes a data subject’s right “not to be subject to a decision based solely on automated processing” when that decision produces legal or similarly significant effects, such as the outcome of a loan or job application. Even where an exception applies, the organization must generally provide for human intervention and allow the individual to contest the decision. This creates a de facto requirement for AI transparency and explainability.

California’s New Frontier: The CCPA’s Right to Opt-Out

The California Consumer Privacy Act (CCPA), expanded by the CPRA, is now pushing AI regulation further. The California Privacy Protection Agency (CPPA) has advanced draft regulations on Automated Decisionmaking Technology (ADMT) that could grant consumers the right to opt out of a business’s use of ADMT for significant decisions. This is a much lower threshold than GDPR’s “solely automated” standard, as it also applies to AI that “substantially facilitates” human decision-making. At scale, building and maintaining a parallel “off-ramp” for California consumers could significantly erode the efficiency gains that AI systems were implemented to achieve, so the off-ramp needs to be part of the design from the start rather than retrofitted.
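To make the “off-ramp” idea concrete, here is a minimal sketch of how a decision pipeline might route requests. Everything in it is an assumption for illustration: the `DecisionRequest` fields, the `Route` names, and the rule that an opt-out or a contested outcome sends a significant decision to a person. The actual triggers depend on the final regulatory text and on your own legal analysis.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTOMATED = "automated"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class DecisionRequest:
    """Hypothetical request record; field names are illustrative only."""
    subject_id: str
    significant: bool          # e.g. a credit or employment decision
    opted_out_of_admt: bool    # the consumer exercised an ADMT opt-out
    contested: bool = False    # the individual challenged an earlier outcome


def route_decision(req: DecisionRequest) -> Route:
    """Use the automated path only when no off-ramp applies."""
    if not req.significant:
        return Route.AUTOMATED
    if req.opted_out_of_admt or req.contested:
        return Route.HUMAN_REVIEW
    return Route.AUTOMATED
```

Keeping the routing rule in one small function means the efficiency cost of the manual path can be measured and budgeted, instead of being discovered after launch.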

The Global Rulebook for Trustworthy AI

As regulations evolve, a set of global standards and frameworks has emerged to provide a cohesive approach to AI governance. Adopting them is key to building a future-proof program.

- The EU AI Act: The Legal Mandate. The world’s first comprehensive, binding law for AI, the EU AI Act establishes a risk-based approach. It classifies AI systems into a pyramid of risk, from “Unacceptable” systems that are banned (like social scoring) to “High-Risk” systems that require stringent compliance (like AI in hiring or credit scoring). Its rules effectively set a global benchmark for AI governance.

- The NIST AI RMF: The Practical Playbook. While the EU AI Act provides the legal “why,” the AI Risk Management Framework (RMF) from the U.S. National Institute of Standards and Technology (NIST) provides the practical “how.” It is a voluntary framework structured around four core functions (Govern, Map, Measure, and Manage) that create a continuous cycle for identifying, assessing, and treating AI risks, uniting technical, legal, and executive teams under a shared process.

- ISO/IEC 42001: The Global Certification. Published in late 2023, ISO/IEC 42001 is the world’s first international, certifiable standard for an Artificial Intelligence Management System (AIMS). While NIST provides the methodology, ISO 42001 provides the formal, auditable structure that proves an organization’s processes are robust and aligned with global best practices, serving as tangible, third-party validation of a commitment to responsible AI.

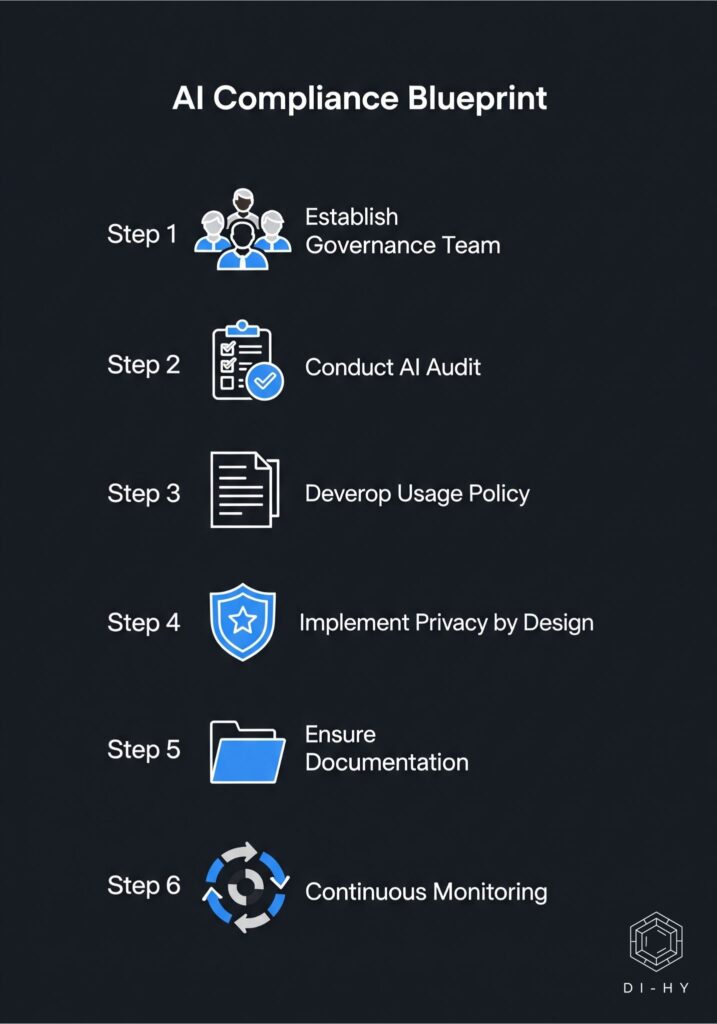

Your Step-by-Step AI Compliance Blueprint

Building a robust program requires a structured approach that integrates technology, policy, and people.

Establish an AI Governance Team. A successful program cannot be run by a single department. It requires a cross-functional team with clear authority, including stakeholders from Legal, Data Science, IT/Cybersecurity, Business Leadership, and Ethics/HR. This team’s first task is to define roles and decision-making authority for the entire AI lifecycle.

Conduct a Comprehensive AI Audit & Risk Assessment. You cannot govern what you cannot see. The next step is a thorough audit to create a complete inventory of all AI systems in use, including the “Shadow AI” tools used without approval. Once inventoried, use a framework like the NIST AI RMF to map potential risks and measure their impact.
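As a rough illustration of what an inventory might look like once it leaves the spreadsheet, the sketch below records each system and ranks it for review. The `AISystem` fields, the 1 to 5 scales, and the likelihood-times-impact score are assumptions made for this example. The NIST AI RMF does not prescribe a scoring formula, so substitute whatever method your governance team agrees on.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """One inventory row; all fields are hypothetical."""
    name: str
    owner: str
    approved: bool     # False marks a "Shadow AI" tool found during the audit
    likelihood: int    # 1 (rare) to 5 (almost certain), illustrative scale
    impact: int        # 1 (negligible) to 5 (severe), illustrative scale

    @property
    def risk_score(self) -> int:
        # Simple likelihood x impact score, chosen here for illustration.
        return self.likelihood * self.impact


def prioritize(inventory: list[AISystem]) -> list[AISystem]:
    """Order the review queue: unapproved tools first, then highest score."""
    return sorted(inventory, key=lambda s: (s.approved, -s.risk_score))


inventory = [
    AISystem("resume-screener", "HR", approved=True, likelihood=3, impact=5),
    AISystem("personal-chatbot", "Marketing", approved=False, likelihood=4, impact=3),
    AISystem("invoice-ocr", "Finance", approved=True, likelihood=2, impact=2),
]
review_queue = [s.name for s in prioritize(inventory)]
# review_queue == ["personal-chatbot", "resume-screener", "invoice-ocr"]
```

Putting unapproved tools at the top reflects the point above: a system nobody has reviewed is a risk regardless of its estimated score.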

Develop a Clear AI Usage Policy. A formal, enforceable AI Usage Policy is essential to manage employee behavior. This policy should be a practical document that includes an explicit list of approved AI tools, clear rules defining what types of data can be used, and guidelines for verifying the accuracy of AI-generated content.
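A policy is easier to enforce when its core rules are also machine-readable. The sketch below shows one possible shape: an approved-tool list plus the data classifications each tool may receive. The `UsagePolicy` class, the tool names, and the classification labels are hypothetical and would come from your own policy document.

```python
from dataclasses import dataclass, field


@dataclass
class UsagePolicy:
    """Hypothetical machine-readable slice of an AI Usage Policy."""
    approved_tools: set[str]
    # Maps each tool to the data classifications it may receive.
    allowed_data: dict[str, set[str]] = field(default_factory=dict)

    def check(self, tool: str, data_class: str) -> tuple[bool, str]:
        """Return (allowed, reason) for sending data_class to tool."""
        if tool not in self.approved_tools:
            return False, f"{tool} is not on the approved tool list"
        if data_class not in self.allowed_data.get(tool, set()):
            return False, f"{data_class} data may not be sent to {tool}"
        return True, "allowed"


policy = UsagePolicy(
    approved_tools={"internal-llm"},
    allowed_data={"internal-llm": {"public", "internal"}},
)
```

A check like this could sit in a browser extension, an API gateway, or a pre-submit hook. The third policy element, verifying the accuracy of AI-generated content, still needs a human process.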

Implement “Privacy and Security by Design.” Compliance cannot be an afterthought. The principles of privacy and security must be embedded into the AI lifecycle from the very beginning. This includes data minimization (using only necessary data), anonymization techniques, and robust technical controls like encryption and secure development practices.
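Here is a minimal sketch of two of these controls applied before a record reaches a model: dropping every field that is not on an allow-list, and replacing the direct identifier with a keyed hash. Note the swap: keyed hashing is pseudonymization, which is weaker than true anonymization because anyone holding the key can re-link records. The field names and the `ALLOWED_FIELDS` list are assumptions for the example.

```python
import hashlib
import hmac

# Hypothetical allow-list of the only fields the model is permitted to see.
ALLOWED_FIELDS = {"customer_id", "income", "loan_amount"}


def minimize(record: dict, allowed: set[str] = ALLOWED_FIELDS) -> dict:
    """Data minimization: keep only allow-listed fields."""
    return {k: v for k, v in record.items() if k in allowed}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with an HMAC-SHA256 digest (64 hex characters)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare(record: dict, secret_key: bytes) -> dict:
    """Return a minimized copy of record with a pseudonymous customer_id."""
    slim = minimize(record)
    if "customer_id" in slim:
        slim["customer_id"] = pseudonymize(str(slim["customer_id"]), secret_key)
    return slim
```

In practice the secret key would live in a secrets manager, separate from the training data, so that the pseudonymized dataset alone cannot be re-linked to individuals.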

Ensure Robust Documentation and Transparency. To meet audit requirements and build trust, maintain meticulous documentation. This includes detailed records of each AI model’s purpose and training data, explanations of its decision-making logic, and immutable audit trails of all system activities.
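One common way to approximate an immutable audit trail in software is a hash chain, where each entry stores the hash of the one before it. The sketch below is tamper-evident rather than truly immutable: it cannot stop someone from editing the log, but any edit to an earlier entry breaks verification. The function names and the event fields are illustrative assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # Canonical JSON so the same event always hashes to the same value.
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_event(log: list[dict], event: dict) -> None:
    """Append event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, event)})


def verify(log: list[dict]) -> bool:
    """Recompute every link; False means an entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

For stronger guarantees, the latest hash can be periodically written to a separate system that the log's administrators cannot modify.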

Establish Continuous Monitoring and Improvement. AI compliance is an ongoing process, not a one-time project. Establish a cycle of continuous monitoring to watch for performance degradation (“drift”) or the emergence of bias. Risk assessments must be regularly updated, and employee training must be ongoing to maintain a strong human firewall.
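Drift monitoring can start with something as simple as comparing the distribution of a model input or score today against the distribution at deployment. The sketch below uses the Population Stability Index (PSI), one of several possible drift metrics and chosen here only as an example. The bin count and the small floor used to avoid division by zero are assumptions, and both input lists are assumed to be non-empty.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of actual against the expected baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        floor = 1e-6  # avoids log(0) and division by zero for empty bins
        return [max(c / len(values), floor) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but the threshold that triggers a review or a retraining should be set by your own risk assessment. Bias monitoring needs separate metrics computed per demographic group.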

Conclusion: Your Partner for a Compliant Future

The era of AI presents a clear choice: treat compliance as a hurdle, or embrace it as a strategic enabler of trustworthy growth. A proactive strategy, built on global frameworks and a practical implementation blueprint, transforms governance from a cost center into a competitive advantage. It builds trust, empowers innovation, and creates a resilient organization.

This journey requires a rare blend of technical, legal, and strategic expertise. Ready to transform your business with AI while navigating the complexities of compliance? A robust strategy is your first and most critical step. Partner with the experts at di-hy.com. Our AI Strategy Consulting services provide a custom roadmap to ensure your AI initiatives are not only powerful but also compliant, secure, and aligned with your business goals.