Introduction: The Double-Edged Sword of Enterprise AI

Artificial Intelligence (AI) has rapidly evolved from a theoretical concept into a transformational force, fundamentally reshaping how businesses operate, innovate, and compete. The adoption rate is staggering; by early 2024, 72% of companies reported integrating AI into their operations, realizing significant improvements in areas like supply chain management and substantial revenue increases in marketing and sales. The momentum continues to build, with 56% of organizations planning to deploy generative AI (genAI) within the next 12 months alone.

However, this rush toward an AI-driven future presents a double-edged sword. While the opportunities are immense, they are matched by a new and complex spectrum of risks, including catastrophic data breaches, discriminatory outcomes, and violations of a growing web of global regulations. The cost of failure is not abstract. The 2024 Cost of a Data Breach Report reveals the global average cost has reached an all-time high of $4.88 million, with the U.S. figure soaring to a sobering $9.36 million.

This creates a dangerous “governance gap” where the velocity of AI adoption is dramatically outpacing the development of robust internal controls. The danger compounds: each new, ungoverned AI application widens the potential attack surface, so aggregate risk can grow faster than the number of tools deployed. Navigating this high-stakes environment demands a strategic approach to governance, risk, and compliance (GRC).

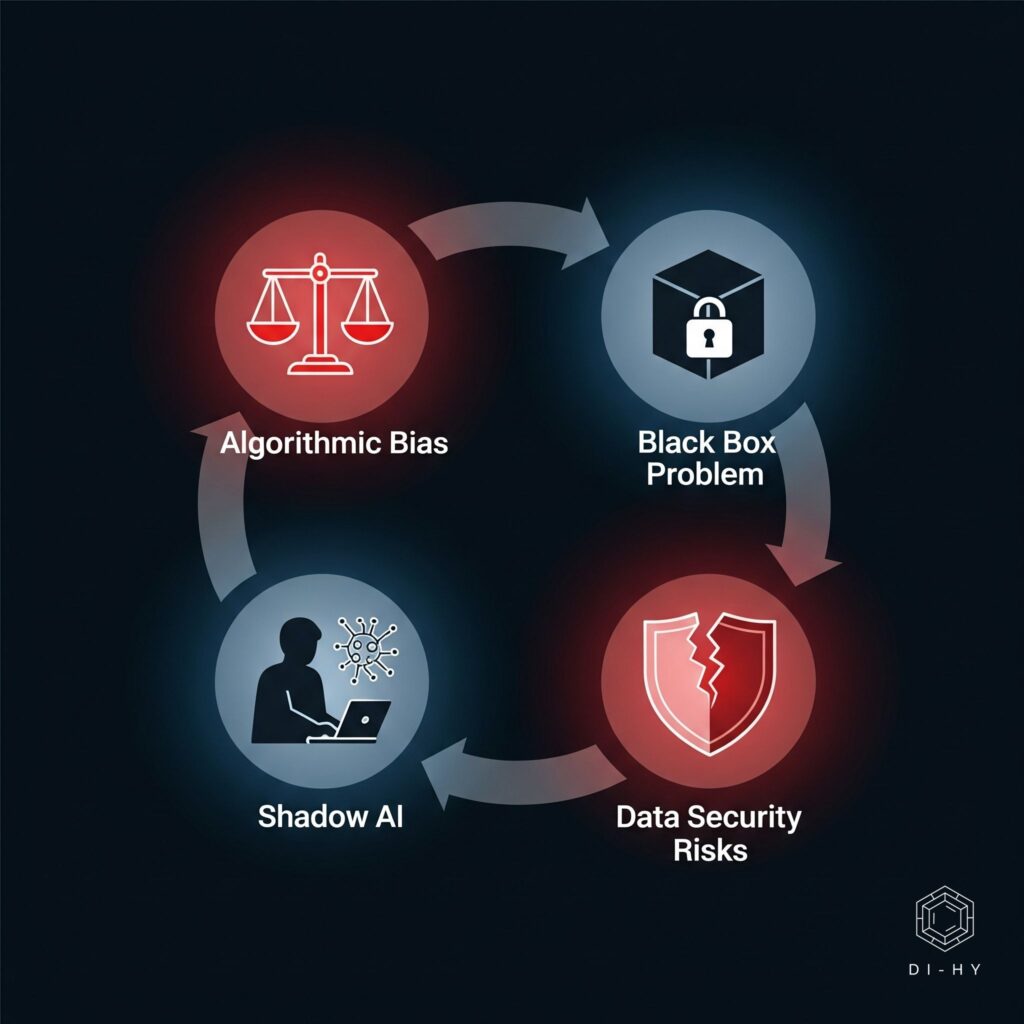

The Four Core AI Compliance Challenges

Before delving into specific regulations, it is essential to understand the foundational challenges that underpin the entire AI compliance landscape. These four core risks are deeply interconnected, and a failure in one area often precipitates a crisis in others.

Algorithmic Bias and Discrimination

One of the most insidious risks of AI is its potential to inherit, amplify, and codify human and societal biases at an unprecedented scale. AI systems learn from the data they are trained on. If that data reflects historical or systemic biases, the AI model will learn these biases as objective fact and apply them in its decision-making.

- Real-World Example: Amazon had to scrap an AI recruiting tool after discovering it systematically penalized female candidates because it was trained on a decade of male-dominated hiring data.
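
A bias check of this kind can be made concrete by comparing selection rates across groups. The sketch below is a minimal illustration, not a complete fairness audit: the function names and the sample data are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb often used as a first screening signal rather than a legal test.

```python
from collections import Counter

def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model recommended the candidate.
    """
    totals, positives = Counter(), Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical screening outcomes, invented for illustration only.
sample = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)
ratio = disparate_impact_ratio(sample)
flagged = ratio < 0.8  # four-fifths rule of thumb; a trigger for review, not a verdict
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a model deserves closer review before it reaches production.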

The “Black Box” Problem: Transparency and Explainability

Many advanced AI models operate as “black boxes,” meaning even their creators cannot fully articulate the precise logic used to arrive at a specific decision. This opacity creates profound challenges for governance:

- Accountability: If a decision cannot be explained, who is accountable for its outcome?

- Audits: A “black box” approach is often insufficient for demonstrating compliance, as it prevents a thorough review of the decision-making process.

- Trust: Customers and employees are less likely to trust a system whose reasoning is opaque, hindering adoption.
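
One way to sidestep the black box for decisions that must be audited is to use an inherently interpretable model, where every factor's contribution to the outcome can be listed. The sketch below assumes a simple linear scoring model; the feature names, weights, and applicant values are hypothetical and chosen only to show what an explainable decision record could look like.

```python
# Hypothetical weights for a linear scoring model (illustration only).
WEIGHTS = {"income_to_debt": 2.0, "years_employed": 0.5, "missed_payments": -1.5}
BIAS = -1.0

def score_with_reasons(applicant):
    """Return the score plus each feature's contribution, most negative first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    reasons = sorted(contributions.items(), key=lambda item: item[1])
    return score, reasons

score, reasons = score_with_reasons(
    {"income_to_debt": 1.5, "years_employed": 4, "missed_payments": 3}
)
# reasons[0] names the factor that pulled the score down the most,
# which is the kind of statement an auditor or customer can act on.
```

More complex models need dedicated explanation techniques, but the goal is the same: a decision record that names the factors behind each outcome.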

Data Privacy and Security

AI’s voracious appetite for data creates significant privacy and security risks. These risks are multifaceted:

- Unintentional Data Input: Employees may unknowingly input sensitive company data into public AI tools, which can amount to an unauthorized disclosure the moment the data leaves the company’s control.

- Re-identification Risk: AI’s powerful pattern-recognition can re-identify individuals from “anonymized” datasets.

- Cybersecurity Vulnerabilities: AI systems themselves are new targets for cyberattacks, like data “poisoning” to manipulate model outputs.
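
Re-identification risk can be estimated before a dataset is handed to a model. A minimal sketch, assuming the k-anonymity measure: it counts how many records share each combination of quasi-identifiers (fields that are harmless alone but identifying together). The field names and rows are hypothetical.

```python
from collections import Counter

def k_anonymity(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same quasi-identifier values.

    A result of 1 means at least one person is unique on those fields and
    could plausibly be re-identified by linking to another dataset.
    """
    if not rows:
        return 0
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
rows = [
    {"zip": "94107", "birth_year": 1985, "sex": "F", "diagnosis": "A"},
    {"zip": "94107", "birth_year": 1985, "sex": "F", "diagnosis": "B"},
    {"zip": "94110", "birth_year": 1990, "sex": "M", "diagnosis": "A"},
]
k = k_anonymity(rows, ["zip", "birth_year", "sex"])
```

Here the third record is unique on ZIP code, birth year, and sex, so the dataset offers no protection for that individual despite containing no names.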

The Rise of “Shadow AI”

Perhaps the most urgent threat is “Shadow AI”—the unsanctioned use of AI applications by employees outside of official IT channels. A staggering 68% of enterprise AI usage is estimated to occur through these unofficial channels. This creates a massive, invisible compliance gap. A single instance of an employee pasting a transaction log into ChatGPT can result in a significant PCI DSS violation.
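
A technical guardrail can reduce this exposure by screening text before it leaves the organization. The sketch below is an assumption about how such a filter might look, not a PCI DSS control in itself: it finds digit runs that look like card numbers, confirms them with the Luhn checksum, and masks them. The function names and the sample prompt are hypothetical, and the card number is a widely used test value.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")

def luhn_valid(digits: str) -> bool:
    """Return True when the digit string passes the Luhn checksum."""
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:  # double every second digit, counting from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0

def redact_card_numbers(text: str) -> str:
    """Mask anything that looks like a card number and passes the Luhn check."""
    def mask(match):
        digits = re.sub(r"[ -]", "", match.group())
        return "[REDACTED PAN]" if luhn_valid(digits) else match.group()
    return CARD_CANDIDATE.sub(mask, text)

prompt = "Refund order 1234567 for card 4111 1111 1111 1111 today."
safe_prompt = redact_card_numbers(prompt)
```

Pattern matching of this kind misses data in unusual formats, so it complements an approved-tools policy and employee training rather than replacing them.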

Mastering the Regulatory Maze: Key Mandates

Navigating the complex web of regulations is a critical task for any enterprise deploying AI. The following is a detailed analysis of key compliance mandates.

HIPAA and AI in Healthcare

The Health Insurance Portability and Accountability Act (HIPAA) establishes the standard for protecting sensitive patient health information (PHI). Any AI system that processes PHI must do so in full compliance, but AI amplifies the challenges.

- The Minimum Necessary Standard: HIPAA requires that access to PHI be limited to the minimum amount necessary, which can conflict with AI models that perform better on vast datasets.

- Business Associate Agreements (BAAs): When using a third-party AI vendor to process PHI, a legally binding BAA is mandatory.

- Risk Analysis: Organizations must explicitly include their AI technologies in their formal Security Risk Analysis to assess how the AI interacts with electronic PHI.

SOX and AI in Financial Reporting

The Sarbanes-Oxley Act of 2002 is a cornerstone of financial regulation, designed to protect investors by improving the accuracy of corporate disclosures.

- Explainability is Key: If an AI system is used as a key internal control over financial reporting (ICFR), it cannot be a “black box.” Management and auditors must be able to understand, document, and test its logic.

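- Heightened Management Responsibility: Under Section 302, the CEO and CFO personally certify the accuracy of financial reports and the effectiveness of the company’s disclosure controls, and Section 404 requires management to assess internal control over financial reporting. When AI models are embedded in those processes, the certifications implicitly cover them, so an AI failure is an internal control failure with direct legal consequences for executives.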

PCI DSS and AI in Payments

The Payment Card Industry Data Security Standard (PCI DSS) provides the global baseline for securing payment card data. The most significant AI-related risk here is “scope creep” caused by “Shadow AI”. When an employee inputs transaction data into an external AI tool, that platform can instantly be considered part of the Cardholder Data Environment (CDE), subjecting it to full PCI DSS requirements—a standard most public tools are not designed to meet.

GDPR and AI in the EU

The EU’s General Data Protection Regulation (GDPR) is the most influential data privacy law in the world. Its rules directly regulate automated systems.

- Article 22: This is the central article governing AI. It establishes a data subject’s right “not to be subject to a decision based solely on automated processing…which produces legal effects concerning him or her”.

- The “Right to Explanation”: While not an explicit phrase in the law, the interplay of several articles (notably Articles 13 to 15 alongside Article 22) obligates companies to provide “meaningful information about the logic involved” in automated decisions.

CCPA and AI in California

The California Consumer Privacy Act (CCPA) is pushing the regulatory frontier further: the California Privacy Protection Agency, which implements and enforces it, has proposed rules specifically targeting AI under the label Automated Decisionmaking Technology (ADMT). If adopted, these rules would grant consumers a potentially transformative right to opt out of a business’s use of ADMT for significant decisions, creating a major operational challenge for companies relying on AI for efficiency.

The Global Rulebook and Foundational Frameworks

Beyond specific laws, a set of global standards has emerged to provide a cohesive approach to AI governance.

The EU AI Act: A Risk-Based Approach

The EU AI Act is the world’s first comprehensive, binding law for AI, establishing a global benchmark. It classifies AI systems into a pyramid of risk, with obligations tailored to the level of potential harm.

| Risk Level | Description & Examples | Key Obligations |
|---|---|---|
| Unacceptable Risk | Banned. These AI systems are a clear threat to safety and rights. Examples include government-led social scoring and AI that uses subliminal techniques to manipulate behavior. | Prohibited. These systems must be phased out, with the ban taking effect in early 2025. |
| High-Risk | Permitted, but with strict requirements. Covers AI in critical infrastructure, employment (CV-sorting), and credit scoring. | Stringent Compliance. Providers must conduct conformity assessments, implement risk management systems, ensure data quality, and enable human oversight. |
| Limited Risk | Subject to transparency obligations. Includes chatbots and deepfakes where users should know they are interacting with AI. | Transparency. Users must be clearly informed that they are interacting with an AI system or that content is AI-generated. |
| Minimal or No Risk | No specific legal obligations. This includes the vast majority of AI systems like AI-enabled video games or spam filters. | None. These systems are free to operate without additional regulatory burden under the Act. |


The NIST AI Risk Management Framework (RMF)

The NIST AI RMF is a practical playbook from the U.S. National Institute of Standards and Technology. It is a voluntary framework structured around four core functions:

- GOVERN: Establishing a culture of risk management across the organization.

- MAP: Identifying the context and potential risks of an AI system.

- MEASURE: Developing methods to analyze, assess, and monitor those risks.

- MANAGE: Allocating resources to mitigate, transfer, or accept prioritized risks.

ISO/IEC 42001 & SOC 2

- ISO/IEC 42001: This is the world’s first international, certifiable standard for an Artificial Intelligence Management System (AIMS). Certification serves as tangible, third-party validation of an organization’s commitment to responsible AI.

- SOC 2: A SOC 2 report provides assurance to customers about the design and effectiveness of a service organization’s internal controls, based on five Trust Services Criteria: Security, Availability, Processing Integrity, Confidentiality, and Privacy.

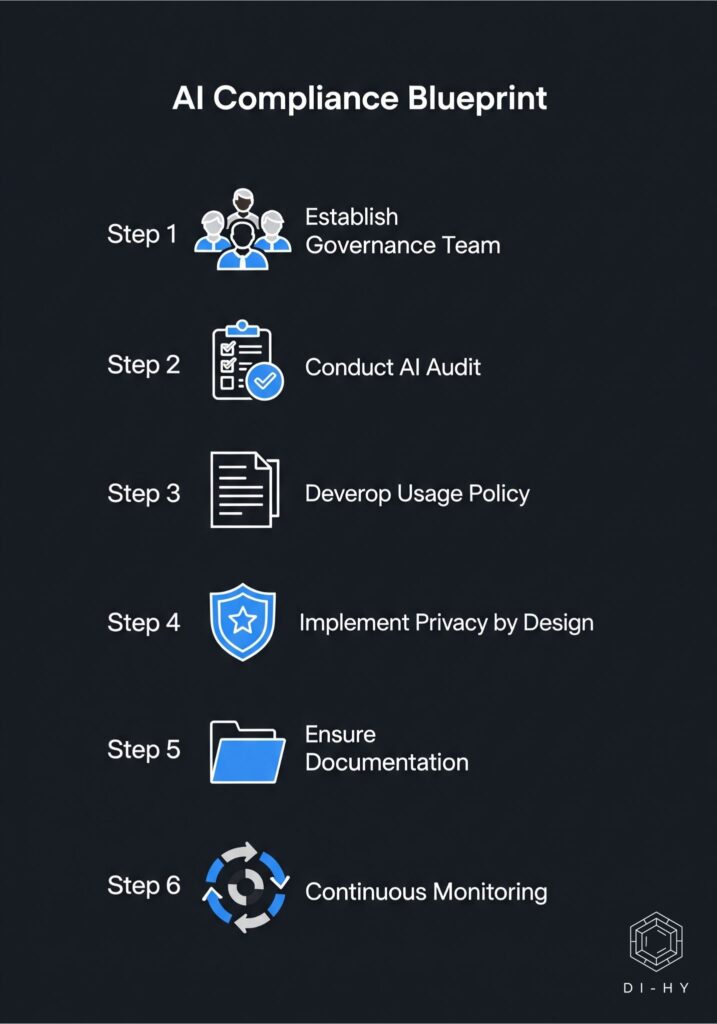

Your Step-by-Step Blueprint for Building an AI Compliance Program

Translating knowledge into a resilient, enterprise-wide program is the critical challenge. The following steps provide a practical blueprint for organizations to begin this journey.

1. Establish an AI Governance Team

A successful program requires a cross-functional governance team with clear authority, including stakeholders from Legal, Data Science, IT/Cybersecurity, Business Leadership, and Ethics/HR.

2. Conduct a Comprehensive AI Audit & Risk Assessment

An organization cannot govern what it cannot see. The next step is a thorough audit to create a complete inventory of all AI systems in use, including “Shadow AI”. Once inventoried, use a framework like the NIST AI RMF to map and measure potential risks.
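
An inventory is easier to act on when each system is recorded in a consistent structure. The following is a minimal sketch of such a record and a prioritized review queue. The field names, the example systems, and the tier assigned to each are hypothetical; assigning a real risk tier is a legal and business judgment, not something code decides.

```python
from dataclasses import dataclass
from enum import IntEnum

class RiskTier(IntEnum):
    """Risk tiers modeled on the EU AI Act categories; lower value = more severe."""
    UNACCEPTABLE = 0
    HIGH = 1
    LIMITED = 2
    MINIMAL = 3

@dataclass(frozen=True)
class AISystem:
    name: str
    owner: str
    sanctioned: bool   # False marks "Shadow AI" found during the audit
    data_types: tuple  # kinds of data the system touches
    risk_tier: RiskTier

def review_queue(inventory):
    """Unsanctioned tools first, then sanctioned tools in the two highest tiers."""
    flagged = [
        s for s in inventory
        if not s.sanctioned or s.risk_tier <= RiskTier.HIGH
    ]
    return sorted(flagged, key=lambda s: (s.sanctioned, s.risk_tier))

# Hypothetical audit findings.
inventory = [
    AISystem("spam-filter", "IT", True, ("email metadata",), RiskTier.MINIMAL),
    AISystem("cv-screener", "HR", True, ("applicant CVs",), RiskTier.HIGH),
    AISystem("personal-chatbot-account", "unknown", False,
             ("transaction logs",), RiskTier.LIMITED),
]
queue = [system.name for system in review_queue(inventory)]
```

The unsanctioned chatbot account lands at the top of the queue even though its tier is lower, reflecting the point that ungoverned usage is the first thing to bring under control.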

3. Develop a Clear AI Usage Policy

A formal, enforceable AI Usage Policy is essential to manage employee behavior. This policy should include an explicit list of approved tools, clear rules defining what data types can be used, and guidelines for verifying AI-generated content.
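
A usage policy is easier to enforce when it is also expressed in machine-readable form. The sketch below assumes a simple mapping from approved tools to the data classifications each may receive; the tool names and classification labels are hypothetical placeholders.

```python
# Hypothetical policy: approved tools and the data classes each may receive.
POLICY = {
    "internal-assistant": {"public", "internal", "confidential"},
    "public-chatbot": {"public"},
}

def is_allowed(tool, data_class, policy=POLICY):
    """Return True only when the tool is approved for this class of data."""
    allowed_classes = policy.get(tool)
    return allowed_classes is not None and data_class in allowed_classes

checks = {
    "approved tool, permitted data": is_allowed("internal-assistant", "confidential"),
    "approved tool, forbidden data": is_allowed("public-chatbot", "confidential"),
    "unapproved tool": is_allowed("unknown-plugin", "public"),
}
```

Denying by default, so that any tool missing from the list is refused, keeps the policy safe when new applications appear faster than the list is updated.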

4. Implement “Privacy and Security by Design”

Compliance cannot be an afterthought. The principles of privacy and security must be embedded into the AI lifecycle from the very beginning, including data minimization and robust security controls.

5. Ensure Robust Documentation and Transparency

To meet audit requirements and build trust, organizations must maintain meticulous documentation, including records of each AI model’s purpose, training data, and decision-making logic.

6. Establish Continuous Monitoring and Improvement

AI compliance is not a one-time project; it is an ongoing process. Organizations must establish a cycle of continuous monitoring to watch for performance degradation (“drift”) and the emergence of biased outcomes.
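
Drift monitoring can start with a simple distribution comparison. The sketch below uses the Population Stability Index (PSI), one common choice among several, to compare current model scores against a baseline. The function name and the synthetic data are hypothetical, and the 0.25 alert level is a commonly cited rule of thumb rather than a regulatory threshold.

```python
import math

def population_stability_index(baseline, current, bins=10):
    """PSI between a baseline sample and a current sample of model scores."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate baseline: every value falls in the first bin

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    base, cur = bin_shares(baseline), bin_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))

# Synthetic scores: an even spread at launch, then a shift toward high values.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [0.5 + i / 200 for i in range(100)]

stable = population_stability_index(baseline_scores, baseline_scores)
drifted = population_stability_index(baseline_scores, shifted_scores)
needs_review = drifted > 0.25  # rule-of-thumb alert level
```

Running the same comparison separately for each demographic group is one way to catch biased outcomes that emerge only after deployment.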

Conclusion: Partnering for a Compliant and Profitable AI Future

The era of AI presents a clear choice: treat compliance as a hurdle, or embrace it as a strategic enabler of trustworthy growth. A proactive and holistic strategy transforms compliance from a cost center into a competitive advantage, building trust with customers and empowering employees to innovate safely.

This journey requires a rare blend of technical, legal, and strategic expertise. Ready to transform your business with AI while navigating these complexities? A robust strategy is your first and most critical step. Partner with the experts at di-hy.com. Our AI Strategy Consulting services provide a custom roadmap to ensure your AI initiatives are not only powerful but also compliant, secure, and aligned with your business goals.