Artificial intelligence (AI) has decisively moved from theoretical concept to transformational business force, and the pace of adoption is striking. By early 2024, 72% of companies reported using AI in their operations, with 56% planning to deploy generative AI within the next year alone. These technologies are delivering substantial benefits, from hyper-personalized customer experiences to significant gains in operational efficiency.

However, this rush toward an AI-driven future is a double-edged sword. The opportunities are matched by a new and complex spectrum of enterprise-level risks, including catastrophic data breaches, discriminatory outcomes driven by algorithmic bias, and violations of a growing web of global regulations.

The cost of failure is not abstract; it is quantifiable and rising. IBM's 2024 Cost of a Data Breach Report puts the global average cost of a data breach at an all-time high of $4.88 million. For organizations in the United States, the figure is an even more sobering $9.36 million.

This reality has created a dangerous “governance gap,” in which the velocity of AI adoption far outpaces the development of robust internal controls and risk management frameworks. The danger does not scale linearly: each new, ungoverned AI application expands the attack surface and interacts with the risks already present, so aggregate exposure compounds rather than simply adding up. Navigating this high-stakes environment requires a strategic, forward-looking approach to governance, risk, and compliance (GRC).

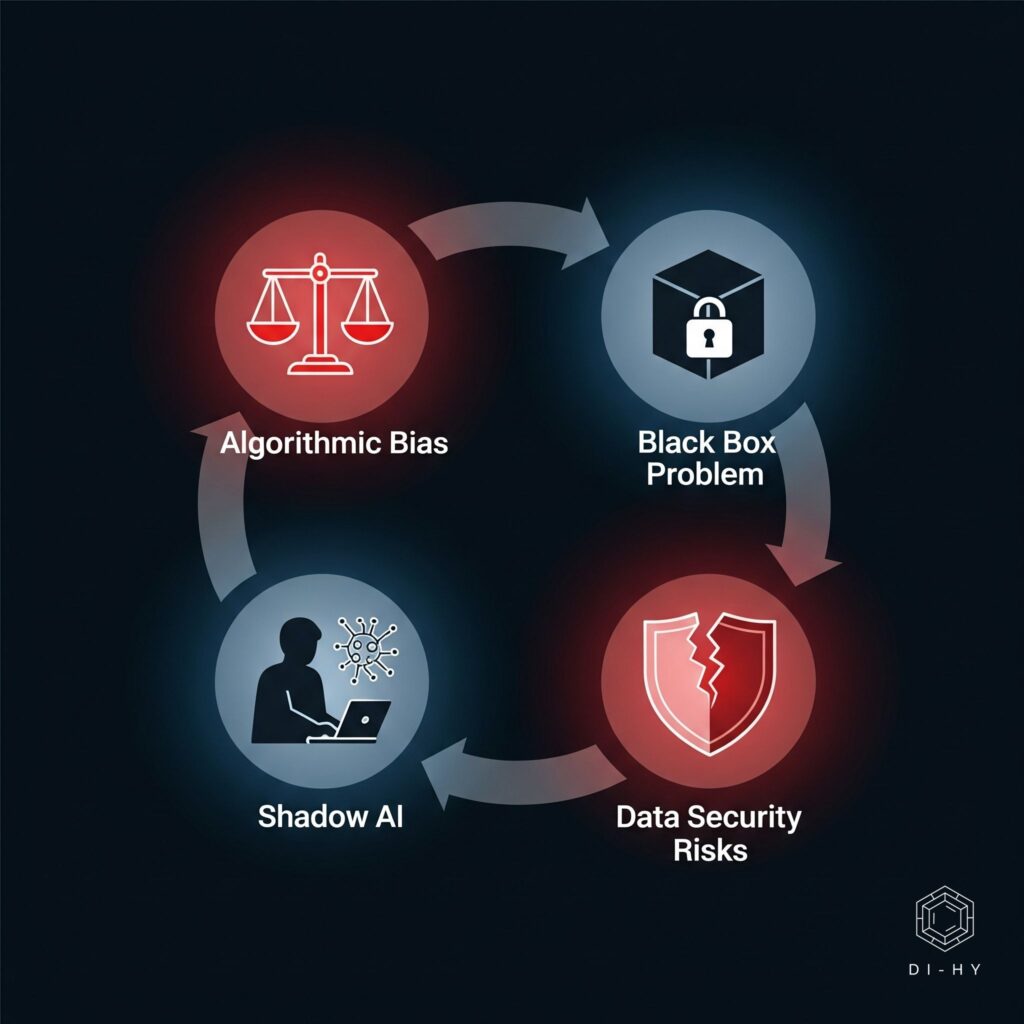

Before diving into specific regulations, it’s essential to understand the foundational challenges that underpin the entire AI compliance landscape. These four core risks are not isolated; they are deeply interconnected, and a failure in one area often precipitates a crisis in others.

Algorithmic Bias and Discrimination

One of the most insidious risks of AI is its potential to inherit, amplify, and codify human and societal biases at an unprecedented scale. AI systems learn from the data they are trained on. If that data reflects historical or systemic biases, such as gender imbalances in a particular industry or racial disparities in loan approvals, the model will treat those patterns as ground truth and reproduce them in its decisions.

This is not a theoretical concern. Real-world examples have provided stark warnings:

- Amazon’s Biased Recruiting Tool: In a well-documented case, Amazon scrapped an experimental AI recruiting tool after discovering that it penalized resumes containing the word “women’s” and favored male candidates. The model had been trained on a decade of resumes submitted to the company, most of which came from men, reflecting male dominance across the tech industry.

- Biased Healthcare Algorithms: A widely used algorithm in U.S. hospitals was found to be significantly biased against Black patients. It used past healthcare costs as a proxy for health needs. Because less was historically spent on Black patients with the same level of illness (owing to systemic inequities in access to care), the algorithm incorrectly concluded they were healthier, and fewer of them were flagged for additional care.

These incidents highlight how AI can perpetuate discrimination, leading to significant legal exposure under anti-discrimination laws and severe reputational damage.
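For hiring-style decisions like the Amazon case, one widely used first screen is to compare selection rates across groups. The sketch below applies the “four-fifths rule” heuristic from the US Uniform Guidelines on Employee Selection Procedures: a group whose selection rate falls below 80% of the highest group's rate is flagged. The function names and sample data are hypothetical, and a real audit would add statistical testing, legal review, and careful handling of protected attributes.

```python
from collections import Counter


def selection_rates(decisions):
    """Share of positive outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the model recommended the candidate.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (ratio, flagged)}. A ratio below `threshold` (0.8 under
    the four-fifths rule of thumb) is flagged for human review. This is a
    screening heuristic, not a legal determination.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:  # nobody was selected, so there is no disparity to measure
        return {g: (1.0, False) for g in rates}
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


# Hypothetical screening results from a resume-ranking model.
sample = ([("men", True)] * 60 + [("men", False)] * 40
          + [("women", True)] * 30 + [("women", False)] * 70)
print(adverse_impact_ratios(sample))
```

In this sample, women are selected at half the rate of men, well under the 0.8 threshold, so the model would be flagged. A flag is a prompt to investigate which inputs drive the gap, not proof of discrimination on its own.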

The “Black Box” Problem: Transparency and Explainability

Many advanced AI models, particularly those based on deep learning, operate as “black boxes”: even their creators cannot fully articulate the precise logic, variables, and weightings the model used to reach a specific decision. This opacity creates profound challenges for enterprise governance:

- Accountability: If a decision cannot be explained, who is accountable for its outcome? When an AI denies a loan, flags a transaction as fraudulent, or makes a hiring recommendation, the inability to explain the “why” undermines trust and makes it nearly impossible to verify that the decision was fair or accurate.

- Audits and Regulatory Scrutiny: Regulators and auditors increasingly require explainability. A “black box” approach is often insufficient for demonstrating compliance, as it prevents a thorough review of the decision-making process.

- Trust: Both customers and employees are less likely to trust a system whose reasoning is opaque, which hinders adoption and creates friction.
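Model-agnostic techniques can recover part of the “why” without opening the box. One such technique is permutation feature importance: shuffle one input at a time and measure how much the model's accuracy drops. The sketch below is a minimal pure-Python version under simplifying assumptions (a single shuffle per feature, accuracy as the only metric); `black_box` and the loan-style data are hypothetical stand-ins. In practice a maintained implementation such as scikit-learn's `sklearn.inspection.permutation_importance`, with repeated shuffles, would be the more robust choice.

```python
import random


def permutation_importance(predict, rows, labels, seed=0):
    """Estimate how much a black-box model relies on each input feature.

    For each feature, shuffle that column across all rows and record how
    much accuracy drops. `predict` can be any function mapping a feature
    list to a label; the model's internals are never inspected.
    """
    rng = random.Random(seed)  # fixed seed so the audit is reproducible

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = []
        for r, value in zip(rows, column):
            row = list(r)
            row[j] = value  # break the link between feature j and the label
            shuffled.append(row)
        importances.append(baseline - accuracy(shuffled))
    return importances


# Hypothetical "black box": approves when income (feature 0) exceeds 50
# and ignores feature 1, an arbitrary applicant ID.
def black_box(features):
    return features[0] > 50


rows = [[income, applicant_id] for applicant_id, income in enumerate(range(0, 100, 5))]
labels = [black_box(r) for r in rows]
print(permutation_importance(black_box, rows, labels))
```

Shuffling income degrades accuracy while shuffling the ID changes nothing, which is evidence an auditor can check. Importance alone does not establish fairness, though: a model can rely heavily on a proxy variable, as in the healthcare example above.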

Data Privacy and Security

AI’s voracious appetite for data creates significant privacy and security risks. AI systems are often trained on vast datasets that may contain sensitive personal, financial, or health information. The risks are multifaceted:

- Unintentional Data Input: Employees seeking to improve productivity may unknowingly enter sensitive customer or proprietary company data into public or unsecured AI tools. This can amount to an immediate data exposure and a potential violation of regulations such as the EU’s General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA).

- Re-identification Risk: Even when data is “anonymized” or “de-identified,” AI’s powerful pattern-recognition capabilities can sometimes combine multiple datasets to re-identify individuals, subverting privacy safeguards.

- Cybersecurity Vulnerabilities: AI systems themselves are new targets for cyberattacks. Adversaries can attempt to “poison” training data to manipulate model outputs or exploit vulnerabilities in the AI software to gain access to the sensitive data it processes.
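Re-identification typically works by linking records on quasi-identifiers: fields such as ZIP code, birth year, and sex that are not names but can single a person out when combined. A common baseline check is k-anonymity, which requires every combination of quasi-identifier values to be shared by at least k records. The sketch below measures k and shows how coarsening the fields raises it. The field names, records, and generalization rule are hypothetical, and k-anonymity is only a starting point; for instance, it offers no protection when everyone in a group shares the same sensitive value.

```python
from collections import Counter


def smallest_group_size(records, quasi_identifiers):
    """Return k, the size of the smallest group of records sharing identical
    values on every quasi-identifier. k == 1 means at least one person is
    unique on those fields and could be re-identified by linking datasets."""
    groups = Counter(tuple(rec[q] for q in quasi_identifiers) for rec in records)
    return min(groups.values())


def generalize(rec):
    """Coarsen quasi-identifiers: keep a 3-digit ZIP prefix and birth decade."""
    return {**rec, "zip": rec["zip"][:3] + "**",
            "birth_year": rec["birth_year"] // 10 * 10}


# Hypothetical "de-identified" records: no names, but quasi-identifiers remain.
records = [
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1983, "sex": "F", "diagnosis": "flu"},
    {"zip": "02141", "birth_year": 1986, "sex": "F", "diagnosis": "migraine"},
    {"zip": "02139", "birth_year": 1981, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "02142", "birth_year": 1987, "sex": "M", "diagnosis": "asthma"},
]
QIS = ["zip", "birth_year", "sex"]
print(smallest_group_size(records, QIS))                           # every record is unique
print(smallest_group_size([generalize(r) for r in records], QIS))  # after coarsening
```

Coarsening trades analytic detail for privacy; how far to generalize is a policy decision, not just a technical one.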

The Rise of “Shadow AI”

Perhaps the most urgent and pervasive threat to enterprise compliance is “Shadow AI”: the unsanctioned adoption and use of AI applications by employees outside official IT channels. Driven by a desire for efficiency, employees are turning to a vast ecosystem of consumer-grade AI tools for daily tasks. By one estimate, a staggering 68% of enterprise AI usage occurs through these unofficial channels.

This creates a massive, invisible compliance gap. A developer at a mid-sized retailer offered a telling real-world example: “I just needed help troubleshooting a payment processing error, so I pasted the transaction log into ChatGPT.” That single action was a significant Payment Card Industry Data Security Standard (PCI DSS) violation, because it transmitted sensitive cardholder data to an external, non-compliant AI service.
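One technical guardrail for this scenario is to scan text for payment card numbers before it leaves the organization, for example in a proxy or browser extension placed in front of external AI tools. The sketch below finds 13 to 19 digit sequences, confirms them with the Luhn checksum that valid card numbers satisfy, and masks all but the last four digits, a common masking convention. The function names and the sample log line are hypothetical.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, test mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_pans(text: str) -> str:
    """Mask anything that looks like a valid card number, keeping the last 4 digits."""
    def mask(match):
        digits = re.sub(r"[ -]", "", match.group())
        if not luhn_valid(digits):
            return match.group()  # leave order IDs and other numbers untouched
        return "*" * (len(digits) - 4) + digits[-4:]
    return CANDIDATE.sub(mask, text)


# Hypothetical log line (4111 1111 1111 1111 is a widely published test number).
log = "2024-01-15 ERROR payment declined card=4111 1111 1111 1111 order=100234"
print(redact_pans(log))
```

The Luhn check cuts down false positives, but pattern matching will still miss cardholder data in other formats, so a filter like this complements, rather than replaces, sanctioned tools and a data loss prevention (DLP) program.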

A Vicious Cycle: How One Mistake Triggers All Four Risks

The true danger of these four risks is their interconnectedness. They cannot be managed in silos.

Consider how a single, seemingly innocent employee action can trigger a compliance catastrophe:

- An employee uses a “Shadow AI” tool, such as a free chatbot, to get help with their work. This is the first point of failure.

- In doing so, they paste sensitive customer information into the tool, causing a data privacy breach.

- Because the tool is a “black box,” the organization has no visibility into how that data is used, stored, or protected.

- If that external model was trained on flawed data, it could produce a biased recommendation that the company cannot explain or defend, introducing algorithmic bias into its decisions.

This chain reaction demonstrates that a holistic, integrated governance strategy is the only effective defense against the compounding risks of enterprise AI.

The Path Forward: From Risk to Resilience

Understanding these four foundational risks is the first step toward harnessing AI’s power responsibly. A reactive, ad-hoc approach is a high-risk gamble, exposing your organization to escalating financial penalties and catastrophic reputational harm.

A proactive and holistic strategy transforms compliance from a cost center into a competitive advantage. It builds trust with customers, empowers employees to innovate safely, and creates a resilient organization capable of navigating the complex and rapidly evolving regulatory landscape. This journey requires a rare blend of technical, legal, and strategic expertise.

Ready to build a smarter, leaner, and more profitable future with AI? A robust strategy is your most critical step. For expert guidance, consider how AI strategy and implementation services from an experienced partner like di-hy.com can provide a custom roadmap to ensure your AI initiatives are powerful, compliant, and secure.